MiDaS Models

A variety of MiDaS models are available. To make them usable with Unity Sentis, the official models were converted to ONNX using this Colab notebook. You'll find links to the pretrained models in ONNX format below or on the GitHub Release page.

Overview

| Model Type | Size | MiDaS Version |
|---|---|---|
| midas_v21_small_256 | 63 MB | 2.1 |
| midas_v21_384 | 397 MB | 2.1 |
| dpt_beit_large_512 | 1.34 GB | 3.1 |
| dpt_beit_large_384 | 1.34 GB | 3.1 |
| dpt_beit_base_384 | 450 MB | 3.1 |
| dpt_swin2_large_384 | 832 MB | 3.1 |
| dpt_swin2_base_384 | 410 MB | 3.1 |
| dpt_swin2_tiny_256 | 157 MB | 3.1 |
| dpt_swin_large_384 | 854 MB | 3.1 |
| dpt_next_vit_large_384 | 267 MB | 3.1 |
| dpt_levit_224 | 136 MB | 3.0 |
| dpt_large_384 | 1.27 GB | 3.0 |

Get The Models

To keep the package size reasonable, only the midas_v21_small_256 model is included with the package when downloading from the Asset Store. To use other models, you have to download them first.

When you create an instance of the Midas class, you can pass the ModelType to the constructor to choose which model to use. In the Unity Editor, if you are about to use a model that is not yet present, you are automatically prompted to allow the file download.

Alternatively, you can manually download the ONNX models from the links above and place them inside the Resources/ONNX folder.
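If you place models manually, a quick check outside of Unity can confirm that the files ended up where you expect. The following is only a minimal sketch in Python, not part of the package: the helper name `missing_models` is hypothetical, and it assumes that each file is named after its model type with an `.onnx` extension, which you should verify against the files you actually downloaded.

```python
from pathlib import Path


def missing_models(onnx_dir, model_types):
    """Return the model types that have no .onnx file in onnx_dir."""
    folder = Path(onnx_dir)
    # Assumption: each file is named "<model type>.onnx".
    return [m for m in model_types if not (folder / f"{m}.onnx").is_file()]


if __name__ == "__main__":
    wanted = ["midas_v21_small_256", "dpt_swin2_tiny_256"]
    # Adjust the path to wherever the Resources/ONNX folder lives in your project.
    print(missing_models("Resources/ONNX", wanted))
```

An empty list means every requested model file was found in the folder.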

Which Model To Use

Overview

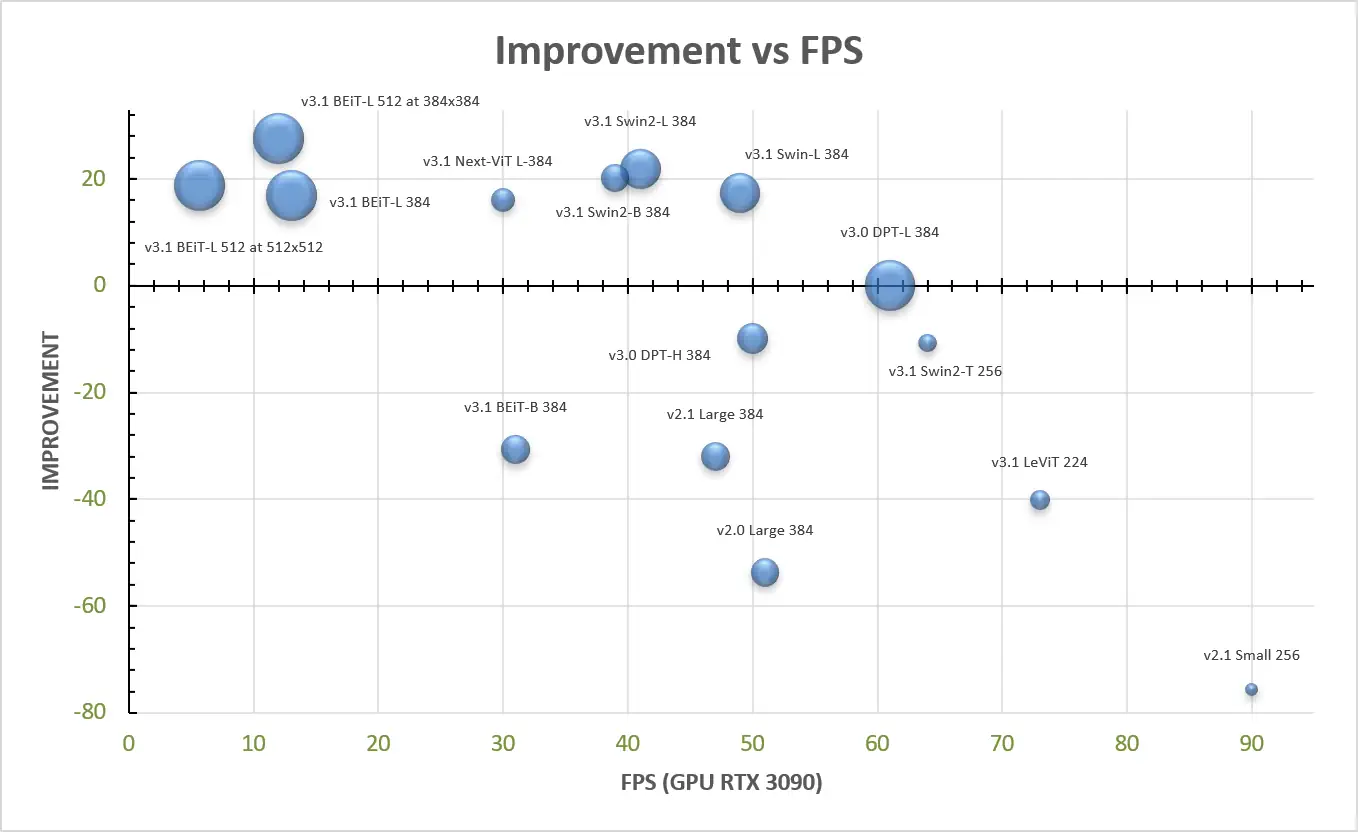

You should choose the appropriate model type based on your requirements, considering factors such as accuracy, model size and performance.

The available models usually trade memory footprint and speed against the accuracy and quality of the depth estimation.

The official MiDaS documentation does a fairly good job of showcasing the different capabilities, so I'll just copy the performance overview here:

Generally, if you have a hard realtime requirement, e.g. when you want to do depth estimation at runtime or on mobile devices, you may want to use smaller models like midas_v21_small_256 or dpt_swin2_tiny_256. If you need the best quality (e.g. for editor tools) or depth estimation only needs to happen once, you might be able to use models like dpt_swin2_large_384 or dpt_beit_large_384. Keep in mind the larger memory requirements of these models, though.
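To narrow down the candidates for a given size budget, the overview table can be turned into a small lookup. This is just an illustrative sketch in Python: the function name `models_within_budget` and the 200 MB budget are made up for the example, and the GB values are converted with 1 GB = 1000 MB, so they are approximate. File size says nothing definite about accuracy or speed, so treat the result as a shortlist to compare, not a ranking.

```python
# Approximate ONNX file sizes in MB, copied from the overview table.
# GB values are converted with 1 GB = 1000 MB, so they are rough.
MODEL_SIZES_MB = {
    "midas_v21_small_256": 63,
    "midas_v21_384": 397,
    "dpt_beit_large_512": 1340,
    "dpt_beit_large_384": 1340,
    "dpt_beit_base_384": 450,
    "dpt_swin2_large_384": 832,
    "dpt_swin2_base_384": 410,
    "dpt_swin2_tiny_256": 157,
    "dpt_swin_large_384": 854,
    "dpt_next_vit_large_384": 267,
    "dpt_levit_224": 136,
    "dpt_large_384": 1270,
}


def models_within_budget(max_size_mb):
    """Return model names whose file size fits the budget, smallest first."""
    fitting = [
        (size, name)
        for name, size in MODEL_SIZES_MB.items()
        if size <= max_size_mb
    ]
    return [name for size, name in sorted(fitting)]


if __name__ == "__main__":
    # Hypothetical budget of 200 MB, e.g. for a mobile build.
    for name in models_within_budget(200):
        print(name, MODEL_SIZES_MB[name], "MB")
```

With a 200 MB budget this leaves midas_v21_small_256, dpt_levit_224 and dpt_swin2_tiny_256 as candidates.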

Examples

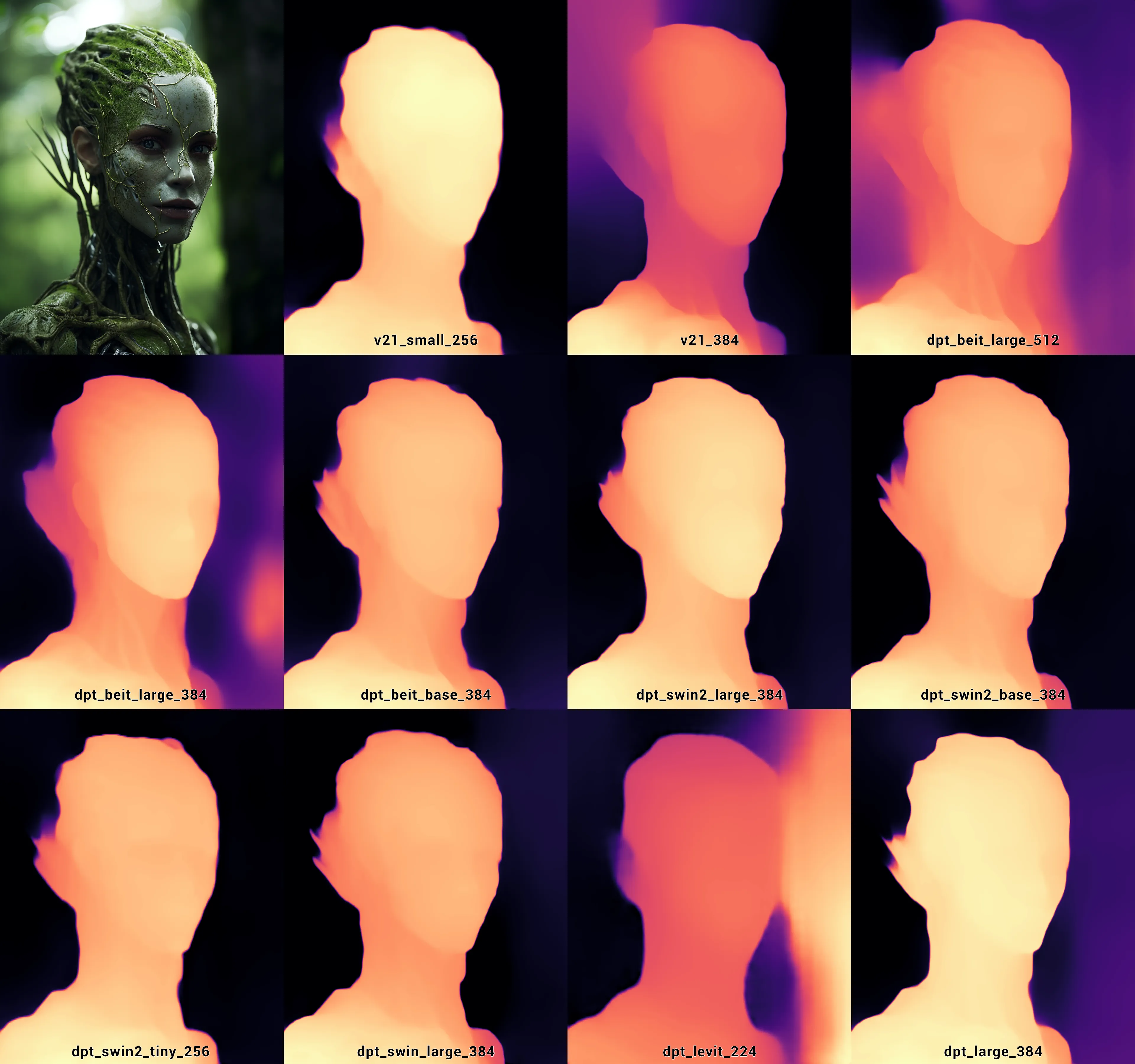

The comparison below is only meant to give a rough overview of the capabilities of each model.

Judging the quality of a depth map from just a 2D image is hard. Consider using the WebcamSample that is included with the package to see how the different models perform when projecting the estimated depth back into 3D as a point cloud.